Welcome to Ziyang’s Homepage!

I am currently a Postdoctoral Research Fellow at Boston Children’s Hospital (the world’s #1 ranked pediatric hospital), a primary teaching affiliate of Harvard Medical School, where I work under the mentorship of Prof. Kaifu Chen. My research focuses on decoding gene regulatory relationships and reconstructing cellular lineage trajectories using graph-based machine learning and single-cell multi-omics data.

I received my Ph.D. from the School of Software at Tsinghua University (GPA: 4.0/4.0, Rank: 1/37), advised by Prof. Chaokun Wang. Before that, I was a postgraduate student in the College of Intelligence and Computing at Tianjin University, where I was advised by Prof. Di Jin and Prof. Dongxiao He.

Education & Experience

Sep. 2025 – Present 💼 Postdoctoral Research Fellow Harvard Medical School

Sep. 2021 – Jun. 2025 🎓 Ph.D. in Software Engineering Tsinghua University

Feb. 2019 – Aug. 2021 💼 Algorithm Engineer JD.com

Sep. 2012 – Jan. 2019 🎓 B.S./M.S. in Computer Science Tianjin University

Research

Graph data is ubiquitous, appearing in social networks, recommendation systems, and molecular graphs. My research focuses on Graph Neural Networks (GNNs) and their applications. I have developed methods that enhance GNNs and graph contrastive learning along several dimensions, including high-quality embedding, efficient storage & computation, and structure preservation.

I am currently interested in leveraging GNNs and large language models to address challenges in biology 🧬.

Contact

The easiest way to reach me is email. My address is ziyang.liu@childrens.harvard.edu.

What’s New

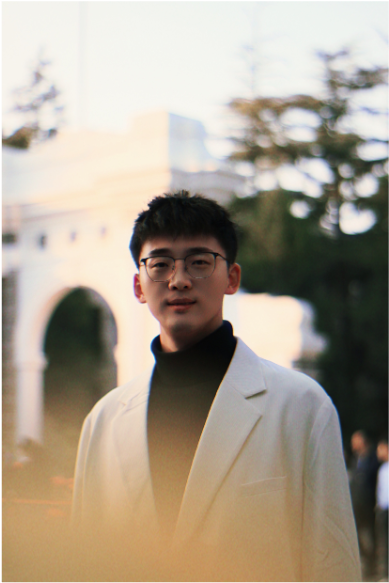

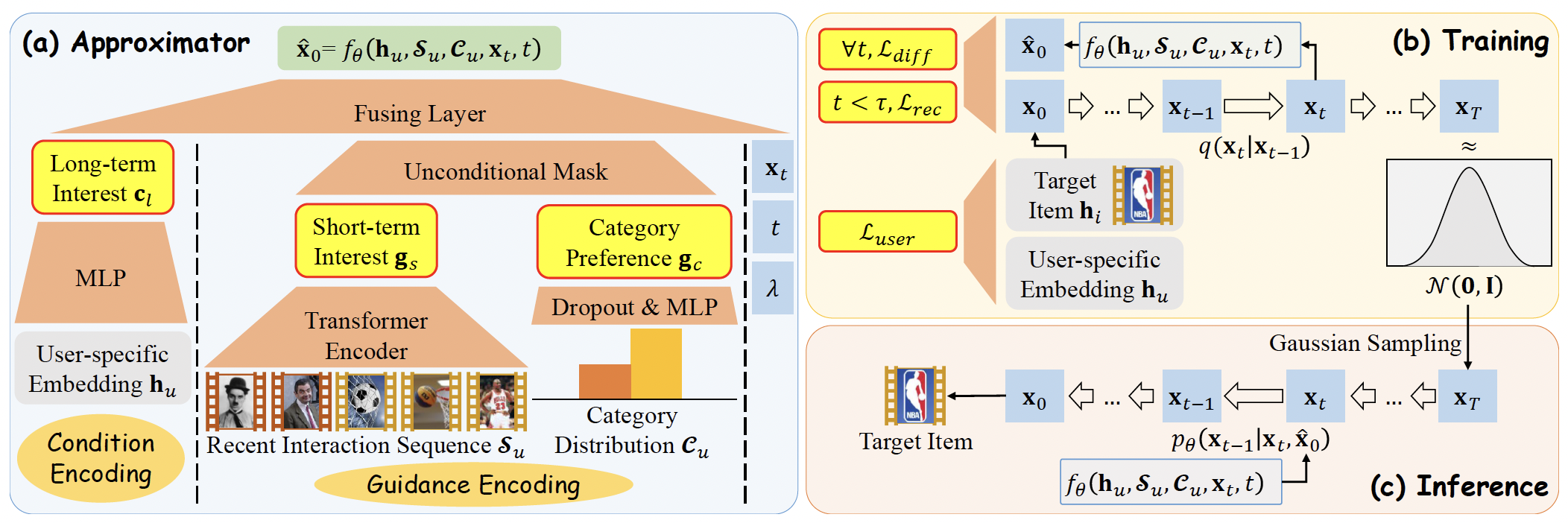

[Nov. 2025] 🌟 One paper “Molecular Motif Learning as a pretraining objective for molecular property prediction” has been published in Nature Communications!

Summary: MotiL is an unsupervised pretraining method that excels on both small molecules and protein macromolecules. It learns chemically consistent molecular representations by preserving both scaffold-level and whole-molecule structure, enabling state-of-the-art performance in molecular property prediction across diverse benchmarks.

Paper Code

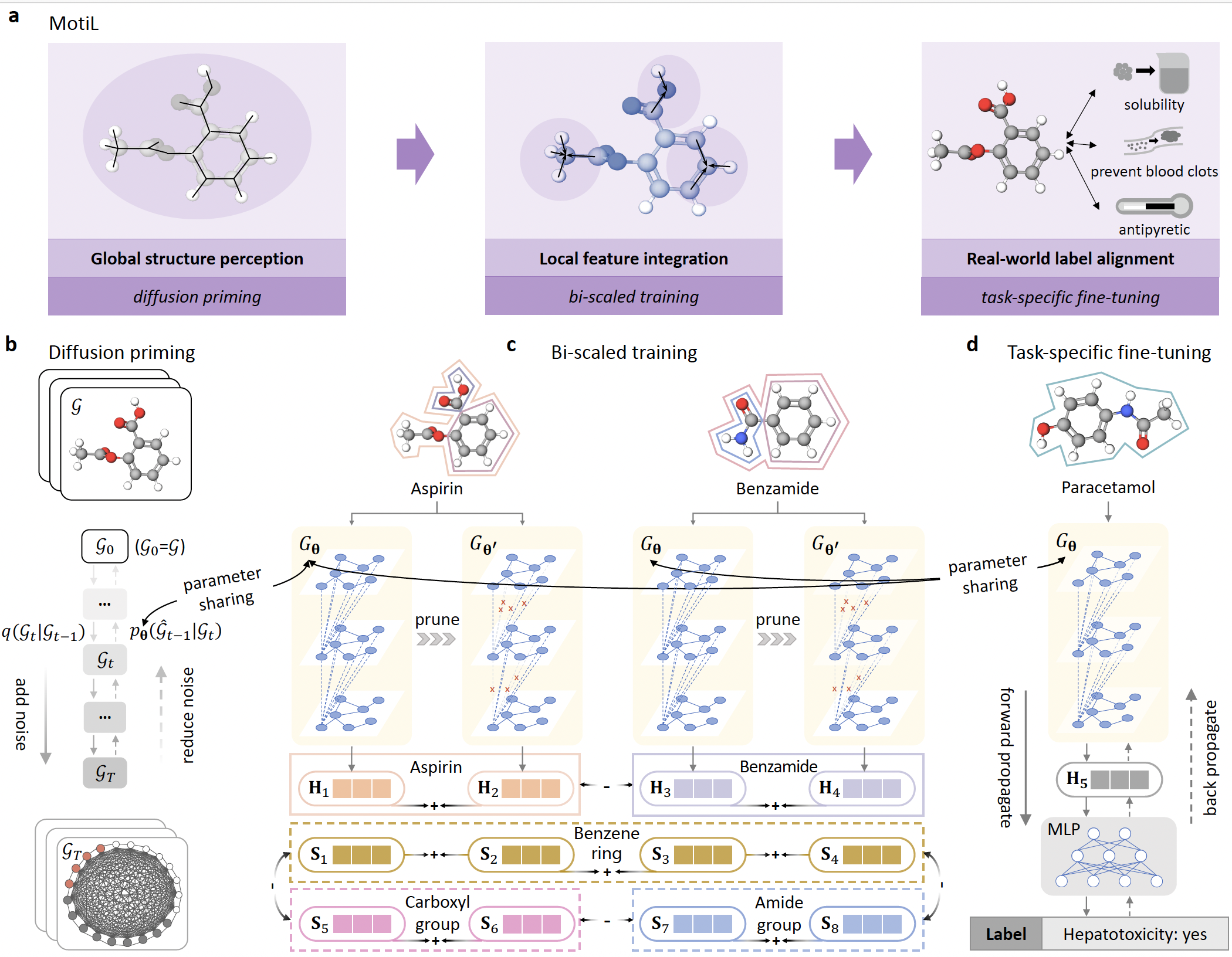

[Sep. 2025] 🤝 One paper “PLForge: Enhancing Language Models for Natural Language to Procedural Extensions of SQL” has been published at SIGMOD 2025!

Summary: PLForge is a family of pre-trained language models (3B–15B parameters) specifically designed for translating natural language to PL/SQL. It leverages a curated PL/SQL corpus, incremental pre-training, and a tailored prompt strategy, and demonstrates its superiority over existing models on a newly constructed NL-to-PL/SQL benchmark.

Paper Code

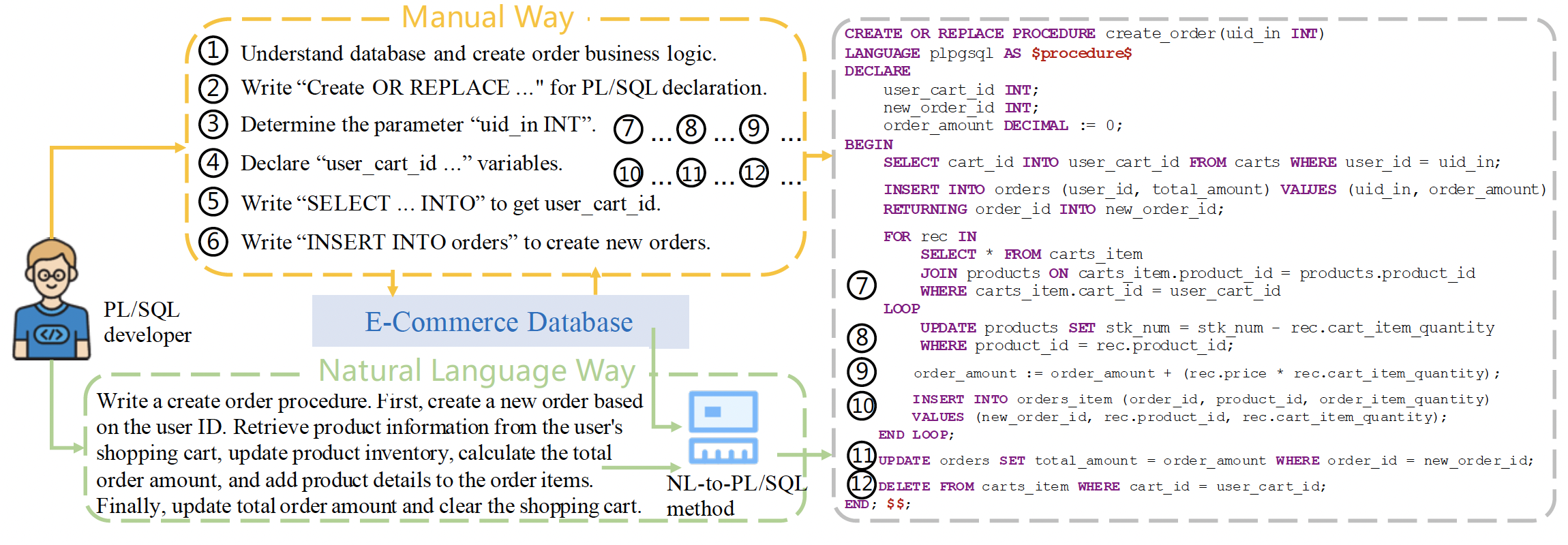

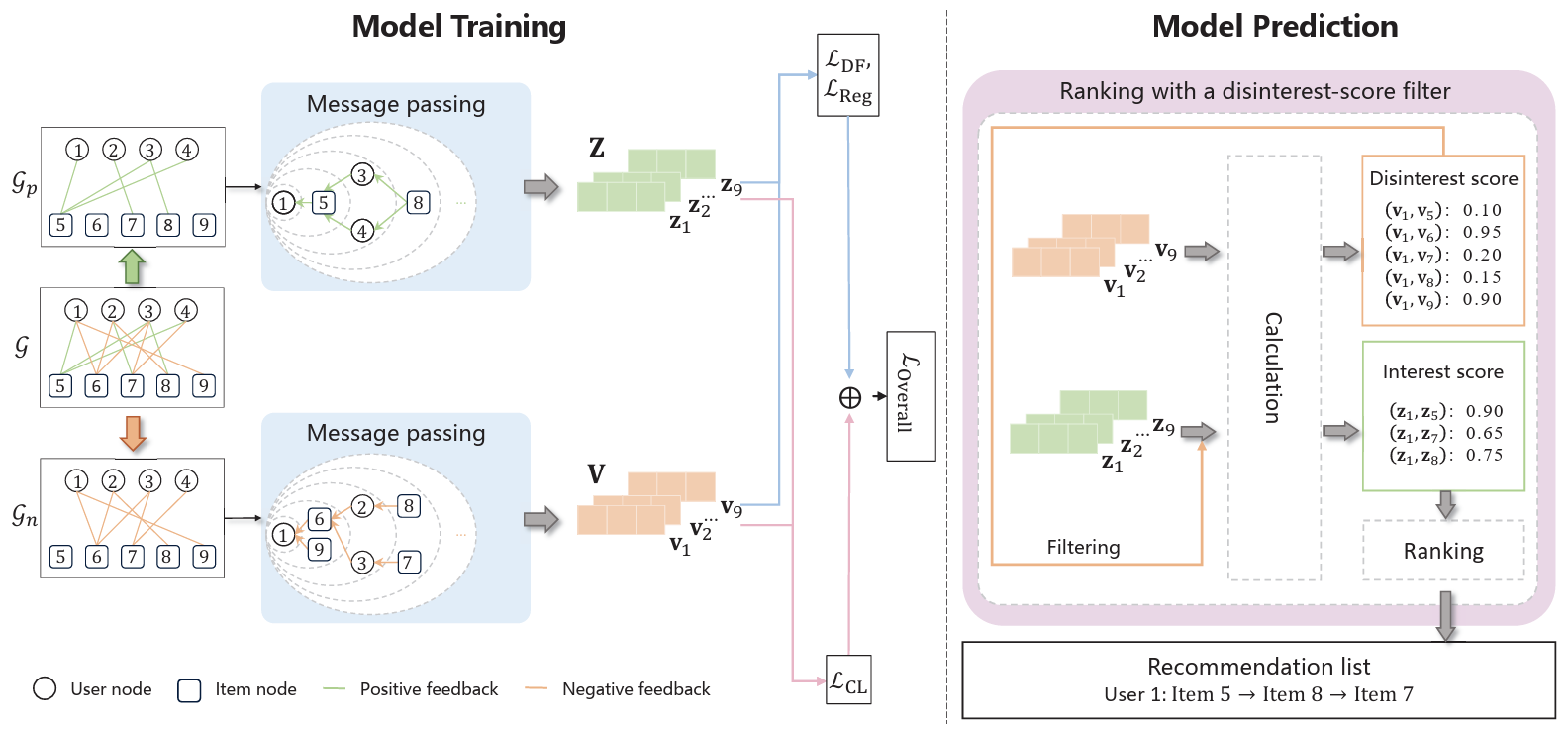

[Sep. 2025] 🤝 One paper “Negative Feedback Really Matters: Signed Dual-Channel Graph Contrastive Learning Framework for Recommendation” has been published at NeurIPS 2025!

Summary: SDCGCL is a model-agnostic framework that effectively leverages negative feedback via dual-channel modeling, cross-channel calibration, and adaptive prediction, boosting performance across graph-based recommenders with minimal overhead.

Paper Code

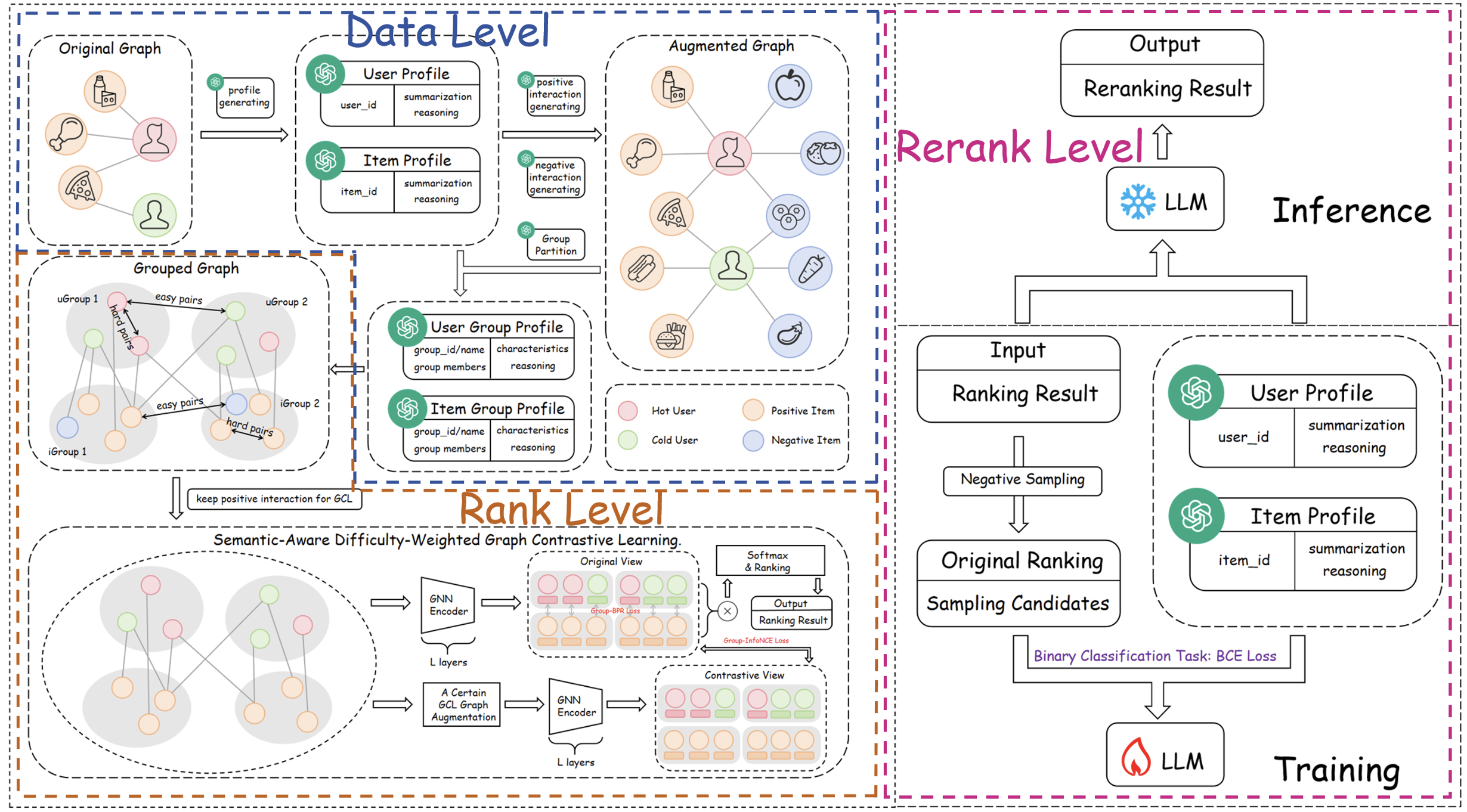

[Aug. 2025] 🤝 One paper “LAGCL4Rec: When LLMs Activate Interactions Potential in Graph Contrastive Learning for Recommendation” has been published at EMNLP 2025 (Findings)!

Summary: LAGCL4Rec addresses key limitations (sparse interactions, coarse negative sampling, and unbalanced preference modeling) in recommender systems by integrating LLMs into graph contrastive learning across data, rank, and rerank levels.

Paper Code

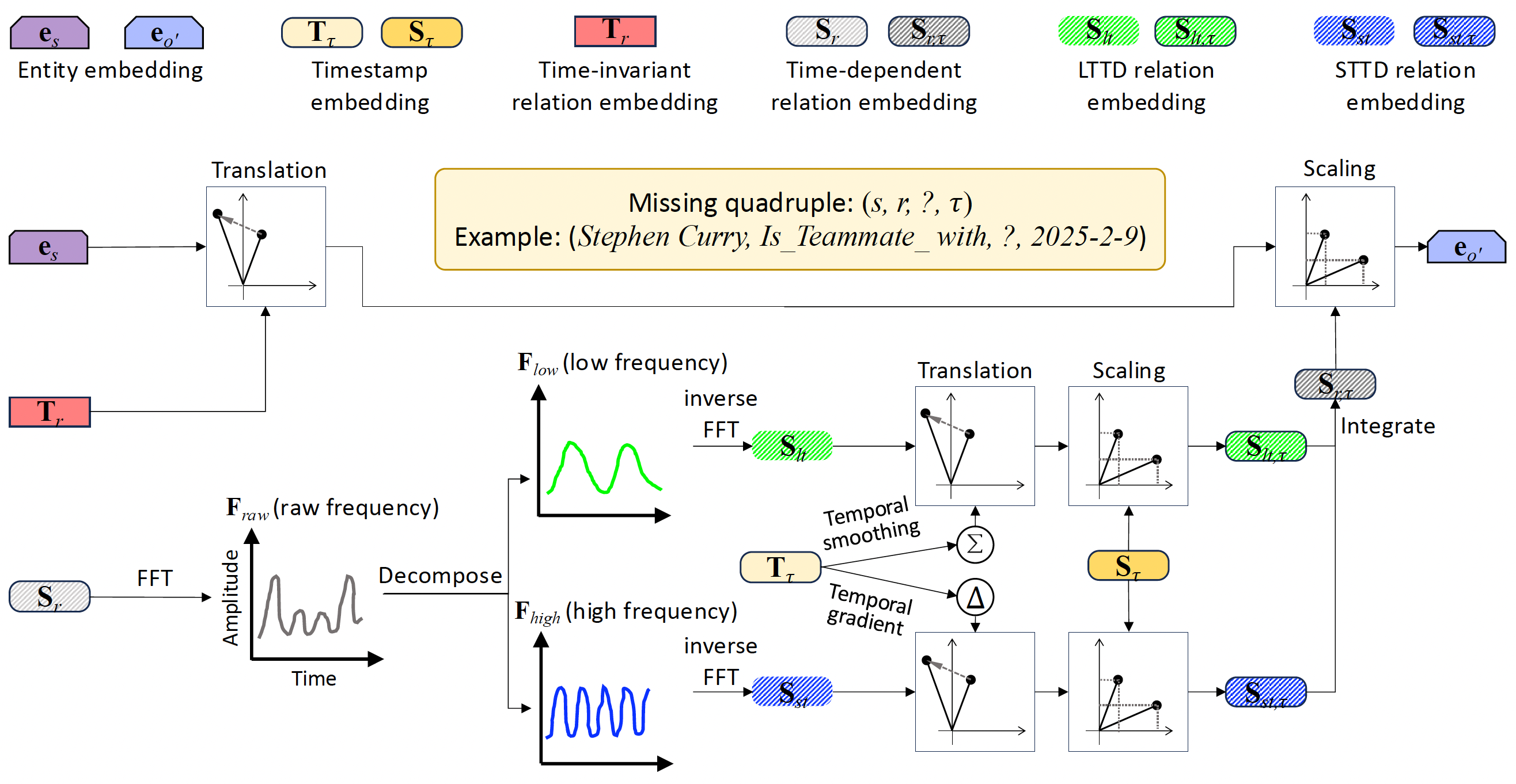

[Jun. 2025] 🌟 One paper “TeRDy: Temporal Relation Dynamics through Frequency Decomposition for Temporal Knowledge Graph Completion” has been published at ACL 2025 (main conference)!

Summary: TeRDy captures long- and short-term temporal dynamics by decomposing relations into low- and high-frequency components.

Paper Code

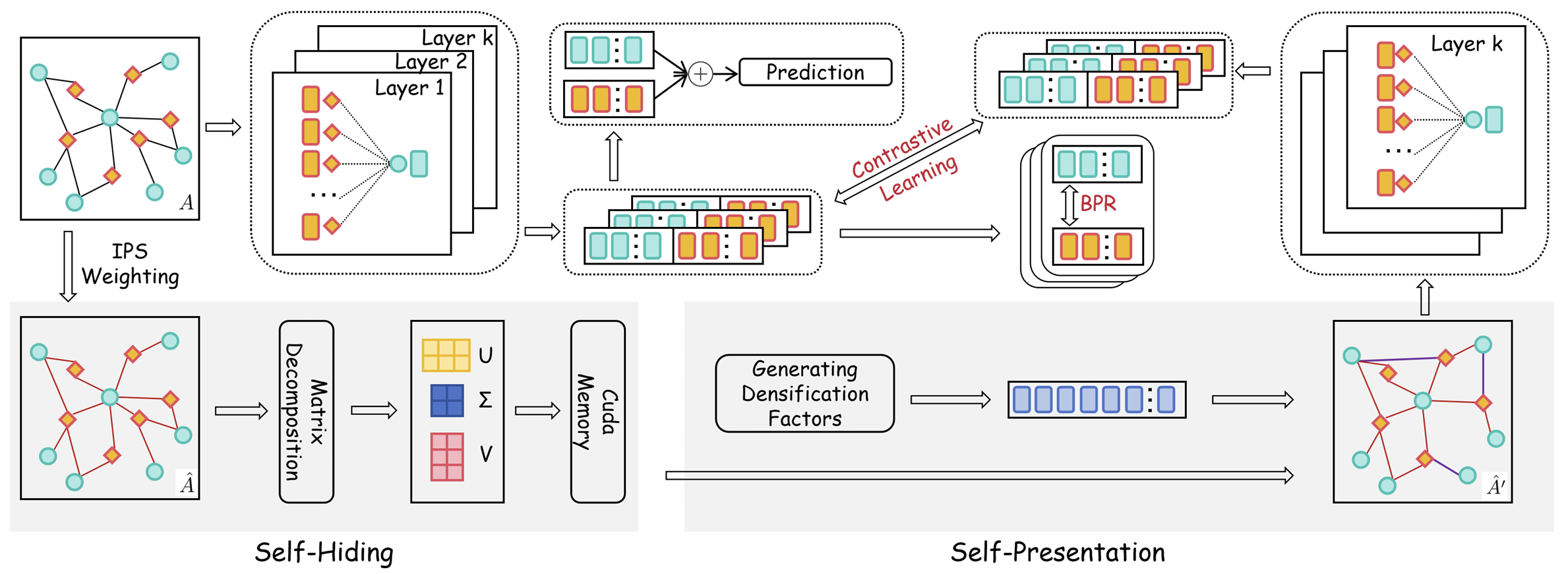

[Apr. 2025] 🤝 One paper “Balancing Self-Presentation and Self-Hiding for Exposure-Aware Recommendation Based on Graph Contrastive Learning” has been published at SIGIR 2025!

Summary: BPH4Rec integrates exposure-aware self-presentation and self-hiding mechanisms into graph contrastive learning for debiased recommendation.

Paper Code

[Dec. 2024] 🌟 One paper “Pone-GNN: Integrating Positive and Negative Feedback in Graph Neural Networks for Recommender Systems” has been published in ACM Transactions on Recommender Systems (ToRS)!

Summary: Pone-GNN unifies positive and negative feedback via dual embeddings and contrastive learning in GNN-based recommendation.

Paper Code

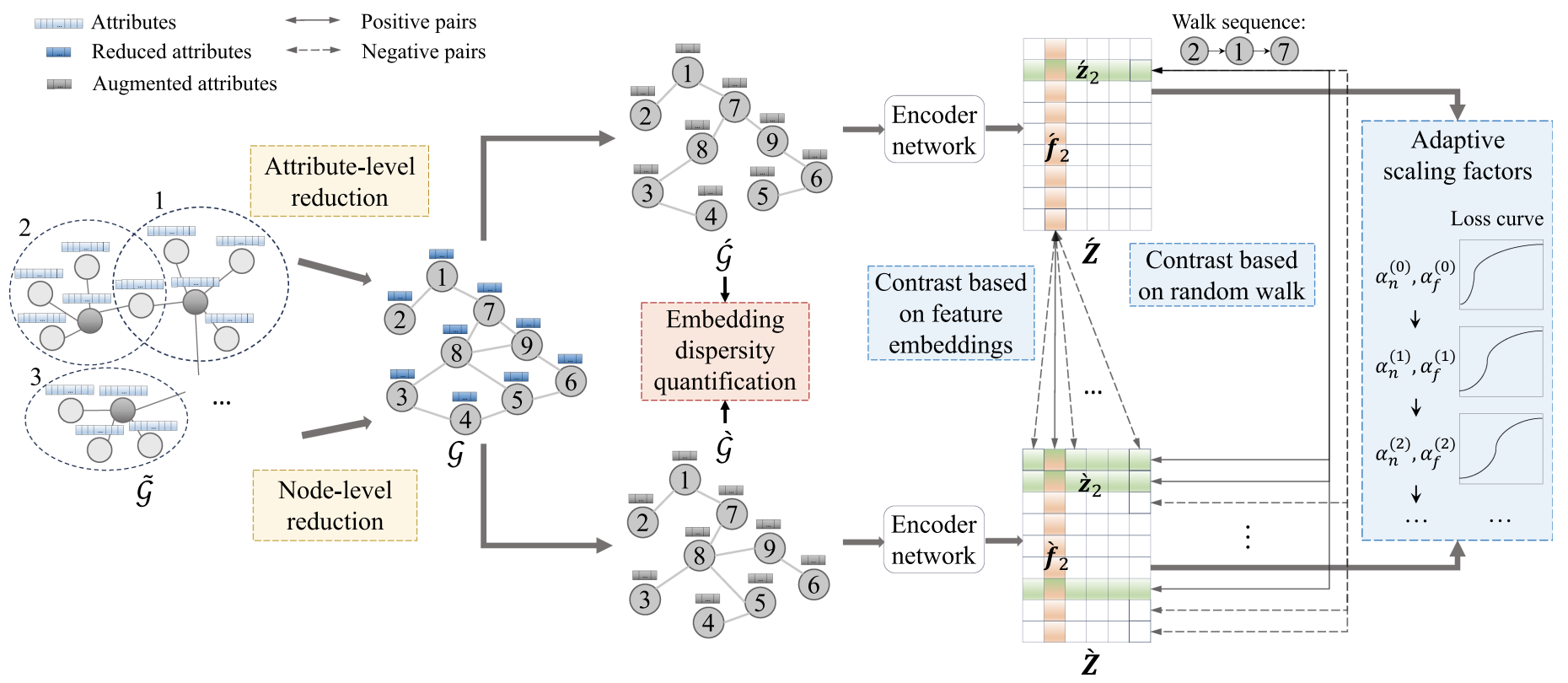

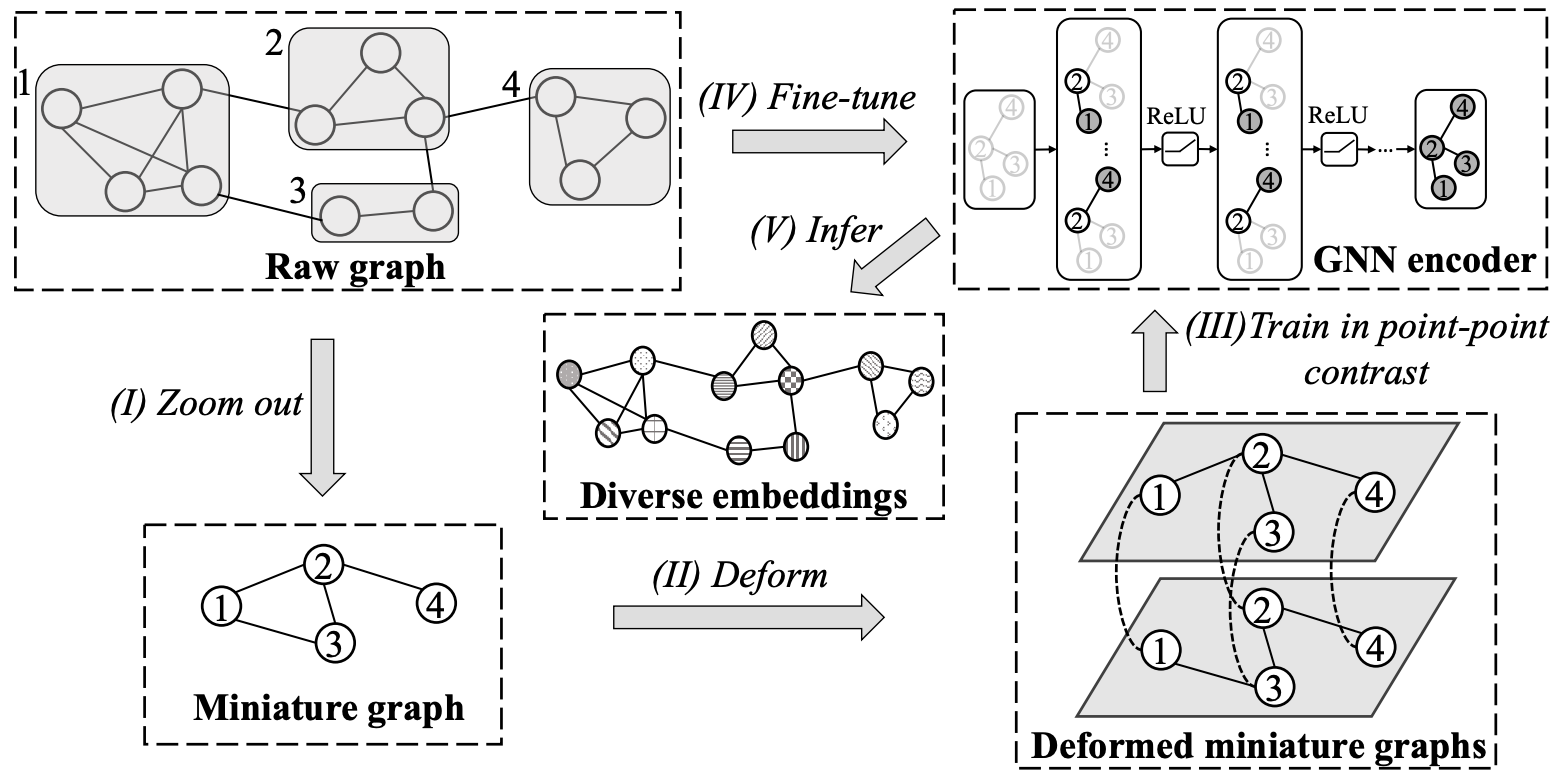

[Jul. 2024] 🌟 One paper “Efficient Unsupervised Graph Embedding with Attributed Graph Reduction and Dual-level Loss” has been published in IEEE Transactions on Knowledge and Data Engineering (TKDE)!

Summary: GEARED boosts efficiency and embedding quality via graph reduction and adaptive dual-level contrastive loss.

Paper Code

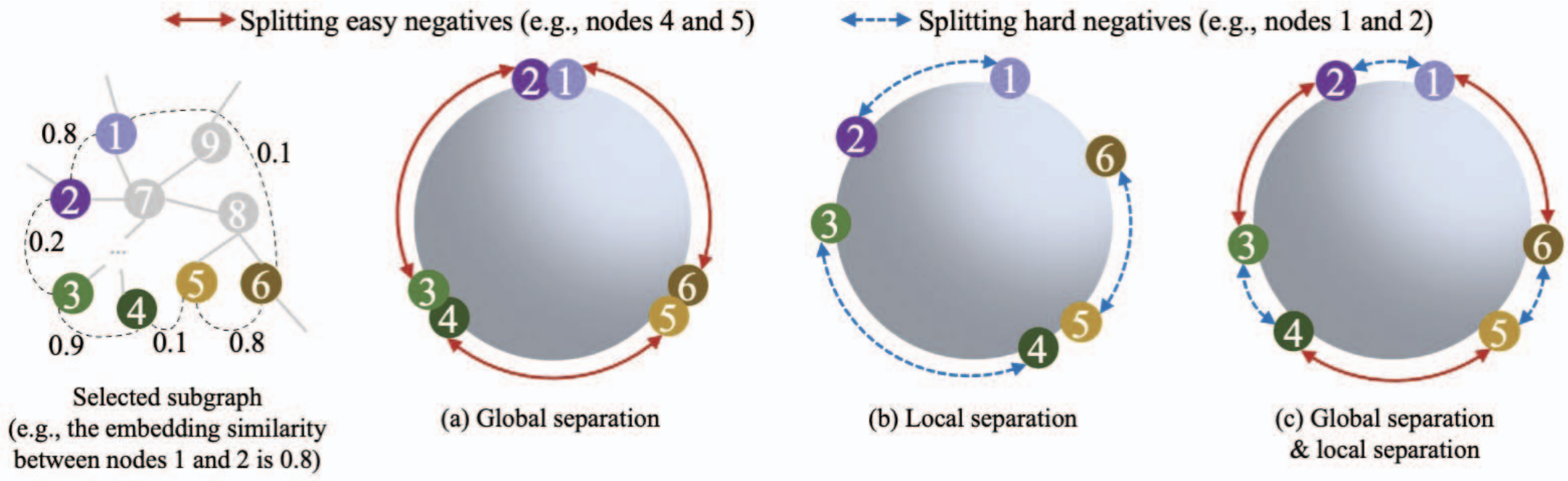

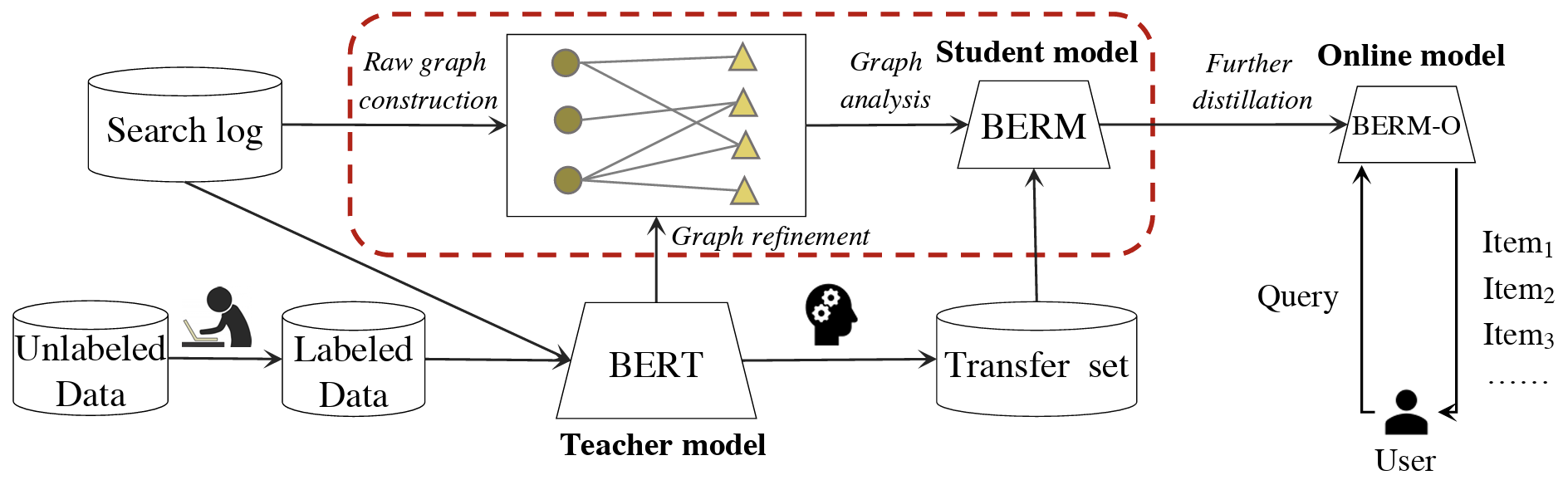

[Mar. 2024] 🌟 One paper “Incorporating Dynamic Temperature Estimation into Contrastive Learning on Graphs” has been published at ICDE 2024!

Summary: GLATE adaptively optimizes contrastive loss temperatures to enhance embedding quality and training efficiency in graph contrastive learning.

Paper Code

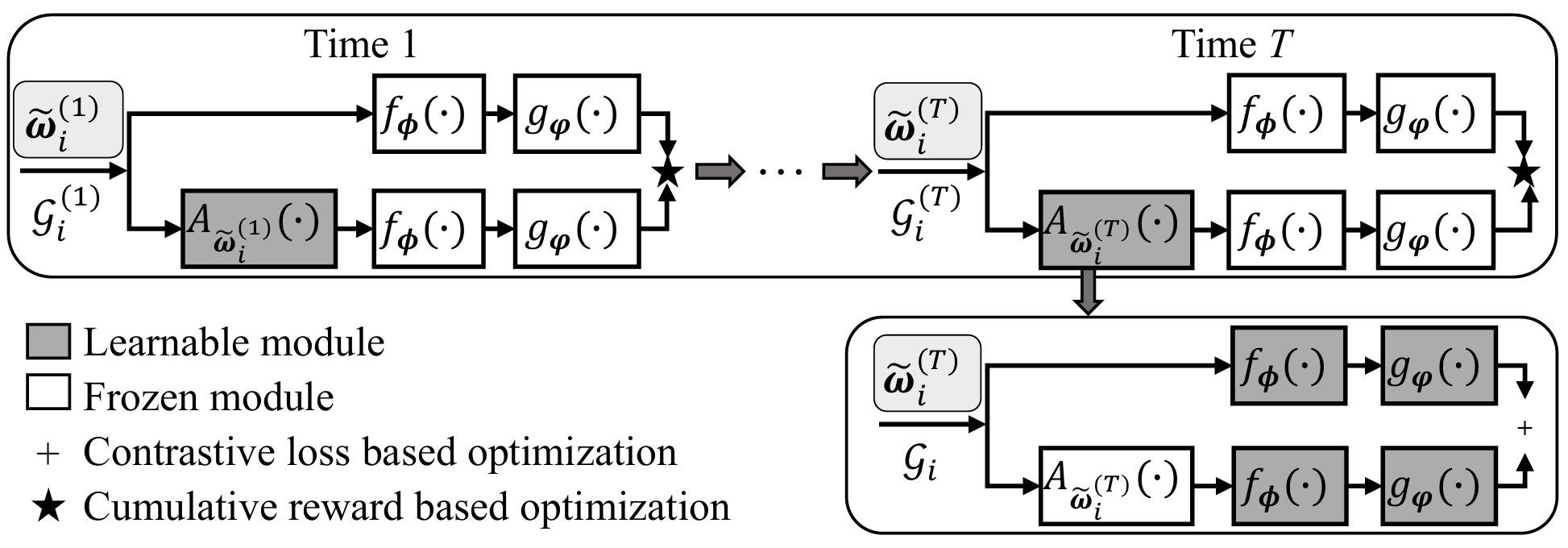

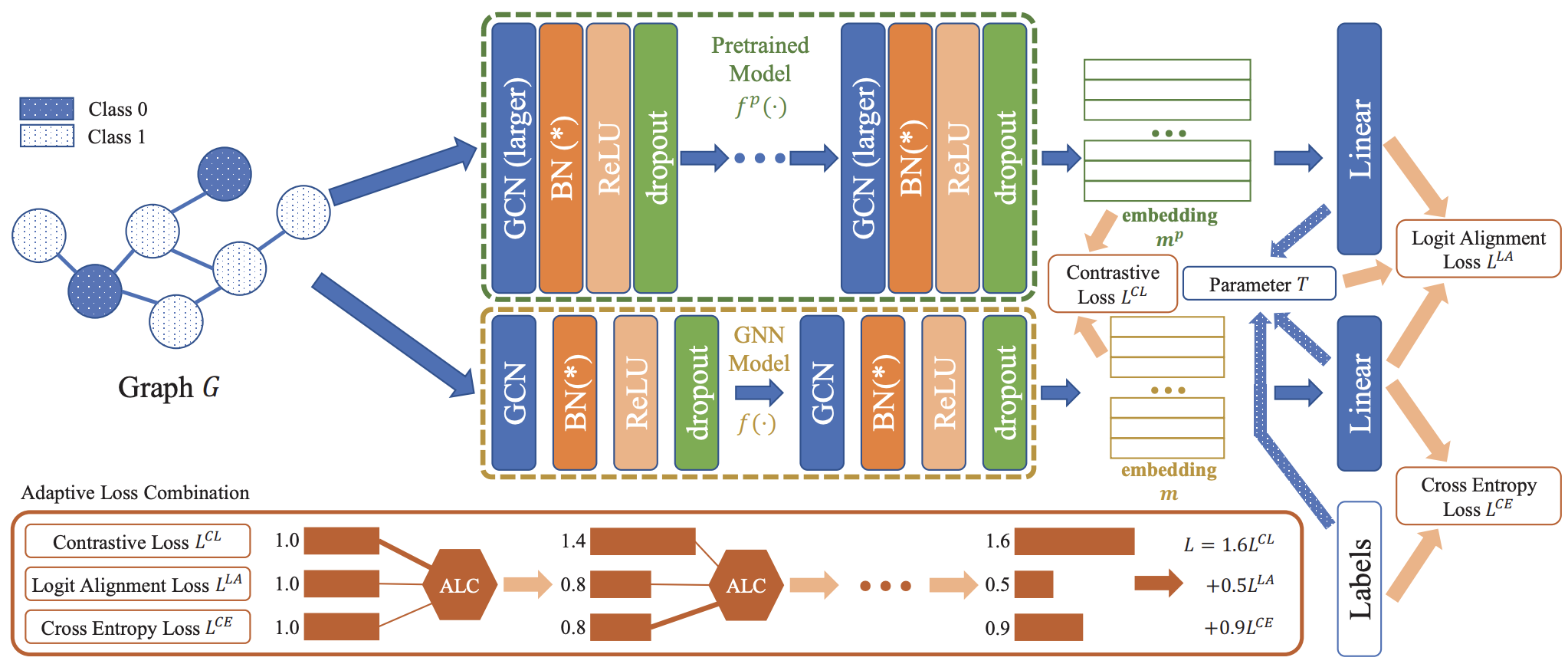

[Mar. 2024] 🤝 One paper “GraphHI: Boosting Graph Neural Networks for Large-Scale Graphs” has been published at ICDE 2024!

Summary: GraphHI enhances GNNs by dynamically integrating inter- and intra-model hidden insights with adaptive loss combination.

Paper

| 🌟 First author | 🤝 Co-author | 🔬 Corresponding author |